Now, that’s what a prequel should be like!

As a young girl, Jyn Erso sees her mother shot and her engineer father taken by Imperial troopers.

A decade later she is an embittered young criminal, on the run from everyone.

The Empire wants her to put pressure on her father.

The Rebellion wants her to stop the Empire getting her.

Then a pilot defects from the Empire with news that could mean a new hope for the Rebellion.

Jyn Erso, along with a motley crew of rebels, finds herself engaged in a desperate race against time.

This is written for, and by, Star Wars original trilogy fans.

There are lots of little call outs to events in those films,

and a couple of space battles in the original style.

The plot explains two big questions from the original:

How did Leia get hold of the Death Star plans?

Why did the Death Star have such a ridiculous weakness?

And it manages this without introducing too many new questions,

although it does introduce a new series of gloomy muddy planets.

Nowadays it is a bit harder to fully engage with an armed insurrection

quite as whole-heartedly as in the more innocent days of 1977, though.

One great piece of continuity is Peter Cushing reprising his role as Grand Moff Tarkin from the 40-year-old original.

Not a bad trick given that Peter Cushing died in 1994.

That’s what CGI can do nowadays: take one actor, and plaster the face of another over the top.

The face worked brilliantly, but the eyes seemed a bit glassy.

They played the same trick at the end with Leia, but that was more uncanny valley territory:

a grizzled old face in the gloom is easier to fake than a young unlined face in full light, it seems.

Mon Mothma also recurs, recast with a good look-alike.

Darth Vader was a bit easier to recast behind that black mask, but still has his great James Earl Jones voice.

Speaking of Leia and Mon Mothma, does this film, with its female lead,

pass the Bechdel test?

Opinion is divided:

the young Jyn does briefly talk with her mother at the beginning,

but they are talking about her hiding from the men coming for them.

Later, Jyn addresses the Rebellion council headed by Mon Mothma.

Are they talking to each other, or is Jyn just addressing the assembled, overwhelmingly male, crowd?

Even if it can be argued a technical pass, it does need to be argued.

The crew Jyn flies with is all male.

About the only other women are another council member, and a couple of fighter pilots, who get a few lines.

Would it have killed them to have had another woman in the crew?

Or even to have had Jyn’s mother be the abducted engineer?

Ah well. Baby steps.

Technically, and plot-wise, the film is great.

Not as good as the original (but what is?), but much superior to the prequel trilogy.

(We probably should have watched it in the cinema to better appreciate the space battles and mushroom clouds, though.)

The plot of Rogue One finishes just before that of Star Wars starts.

It’s a very satisfying prequel that adds to the canon.

For all my film reviews, see

my main website.

Sunday 31 December 2017

Saturday 30 December 2017

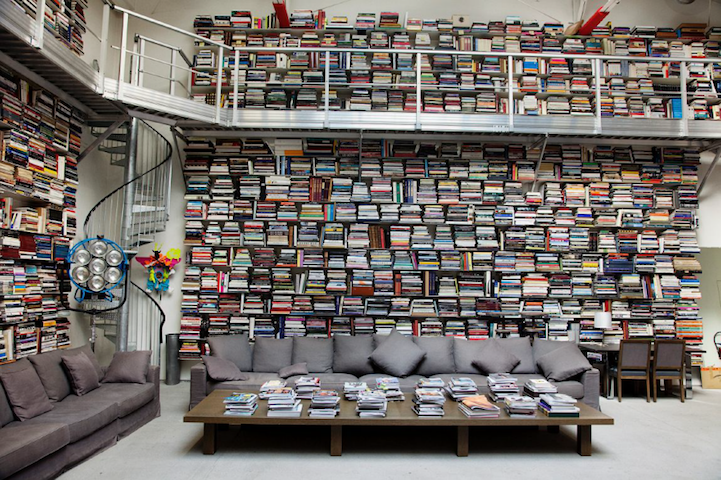

books, books, everywhere you looks

Labels:

books

10 Famous Book Hoarders

Karl Lagerfeld: 300,000 books

For all my social networking posts, see my Google+ page

Thursday 28 December 2017

fleshly material-semiotic presences, anyone?

Continuing strange recommendations by Amazon include a book whose description includes the phrase "dogs are fleshly material-semiotic presences in the body of technoscience". Don’t judge a book by its blurb?

For all my social networking posts, see my Google+ page


Tuesday 26 December 2017

against a dark background

Labels:

Christmas

The Christmas jigsaw event is complete for another year. This year’s task: the 1000-piece Highland Glen. The puzzle was bought from a charity shop, and we discovered it is actually the 999-piece Highland Glen. Can you spot the missing piece? (This is an unretouched photo, but we did do something to the jigsaw before snapping it.)

If you need a clue, we slid an appropriately coloured piece of paper under the puzzle.

If you need a further clue, look at the post title.

If that doesn’t help, here’s a shot without that piece of paper.


Monday 25 December 2017

Christmas done

Labels:

books,

Christmas,

Doctor Who,

food

So that’s all the Christmas Day traditions done for another year:

- exchanging presents

- eating turkey

- watching The Queen

- watching the Doctor Who Christmas special (with two regenerations for the price of one! excellent!)

Thursday 21 December 2017

Wednesday 20 December 2017

tree by night

Christmas comes but once a year. But when it comes, it’s accompanied by trees, and lights, and twinkly stuff.

Friday 15 December 2017

sequestering carbon, several books at a time LXXVIII

Labels:

books,

complexity,

computer,

history,

mathematics,

politics,

psychology,

science,

science fiction

The latest batch. The next batch will appear Monday week, being those that have been smuggled into the house over the last month or so.

This batch includes Inspired by Nature, and Introduction to Coalgebra, a review copy, which means I actually have to read it! The Professional Git is for my other half, who, in his SysAdmin role, has decided we need our own Git repository on our internal network.


Tuesday 12 December 2017

seeing is believing - not

Mind. Blown.

[h/t Danny Yee's blog]

For all my social networking posts, see my Google+ page

The Remarkable “Curvature Blindness” Illusion


Monday 11 December 2017

Sunday 10 December 2017

imprints of birds

Saturday 9 December 2017

Generators have state

Labels:

graphics,

mathematics,

prime numbers,

python

Python’s generator expressions (discussed in two previous posts) are very useful for stream programming. But sometimes, you want some state to be preserved between calls. That is where we need full Python generators.


A generator expression is actually a special case of a generator that can be written inline. The generator expression

(<expr> for i in <iter>) is shorthand for the generator:

    def some_gen():
        for i in <iter>:
            yield <expr>

For example, (n*n for n in count(1)) can be equivalently written as:

    from itertools import *

    def squares():
        for n in count(1):
            yield n*n

    print_for(squares())

1, 4, 9, 16, 25, 36, 49, 64, 81, 100, ...
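To see how a generator keeps its place between calls, here is a small sketch that is not part of the original notebook. The name chatty and the log list are made up for illustration. Each append records how far execution has got, so the log shows that the second next() carries on from just after the first yield rather than starting again from the top.

```python
def chatty(log):
    # Each append records how far execution has got.
    log.append("started")
    yield 1
    log.append("resumed after first yield")
    yield 2
    log.append("resumed after second yield")

log = []
gen = chatty(log)      # nothing runs yet: the body starts on the first next()
first = next(gen)      # runs up to the first yield
second = next(gen)     # carries on from just after the first yield
print(first, second)
print(log)
```

The third message never appears in the log here, because nothing asks the generator for a third value.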

The yield statement acts somewhat like a return in providing its value. But the crucial difference is what happens on the next call. An ordinary function starts off again from the top; a generator starts again directly after the previous yield. This becomes important if the generator includes some internal state: this state is maintained between calls. That is, we can have memory of previous state on the next call. This is particularly useful for recurrence relations, where the current value is expressed in terms of previous values (kept as remembered state).

Running total

The accumulate() generator provides a running total of its iterator argument. We can write this as an explicit sum:

$$ T_N = \sum_{i=1}^N x_i$$

We can instead write this sum as a recurrence relation:

$$ T_0 = 0 ; T_n = T_{n-1} + x_n$$

Expanding this out explicitly we get

$$T_0 = 0 ; T_1 = T_0 + x_1 = 0 + x_1 = x_1 ; T_2 = T_1 + x_2 = x_1 + x_2 ; \ldots$$

+++T_0+++ is the initial state, which is observed directly. The recurrence term +++T_n+++ tells us what needs to be remembered of the previous state(s), and how to use that to generate the current value.

    def running_total(gen):
        tot = 0
        for x in gen:
            tot += x
            yield tot

    print_for(repeat(3))
    print_for(running_total(repeat(3)))
    print_for(running_total(count(1)))

3, 3, 3, 3, 3, 3, 3, 3, 3, 3, ...

3, 6, 9, 12, 15, 18, 21, 24, 27, 30, ...

1, 3, 6, 10, 15, 21, 28, 36, 45, 55, ...
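As a quick sanity check (a sketch added here, not taken from the original notebook), the hand-written running_total() can be compared against itertools.accumulate() on the same input. The definition is repeated so that the snippet runs on its own.

```python
from itertools import accumulate, count, islice

def running_total(gen):
    tot = 0
    for x in gen:
        tot += x
        yield tot

# Take the first ten values from each and compare them.
mine = list(islice(running_total(count(1)), 10))
builtin = list(islice(accumulate(count(1)), 10))
print(mine == builtin)
```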

Factorial

Similarly we can write the factorial as an explicit product:

$$N! = F_N = \prod_{i=1}^N i$$

And we can write it as a recurrence relation:

$$ F_1 = 1; F_n = n F_{n-1} $$

+++F_1+++ is the initial state, which should be the first output of the generator. We can yield this directly on the first call, then (on the next call) go into a loop yielding the following values.

    def fact():
        f = 1
        yield f
        for n in count(2):
            f *= n
            yield f

    print_for(fact())

1, 2, 6, 24, 120, 720, 5040, 40320, 362880, 3628800, ...

Alternatively, we can do the calculation after the yield in the loop. The subsequent call then picks up at that calculation.

    def fact():
        f = 1
        for n in count(2):
            yield f
            f *= n

    print_for(fact())

1, 2, 6, 24, 120, 720, 5040, 40320, 362880, 3628800, ...

Fibonacci numbers

The perennially popular Fibonacci numbers are naturally defined using a recurrence relation, involving two previous states:

$$F_1 = F_2 = 1 ; F_n = F_{n-1} + F_{n-2}$$

This demonstrates how we can store more state than just the result of the previous yield.

    def fib(start=(1,1)):
        a,b = start
        while True:
            yield a
            a,b = b,a+b

    print_for(fib(),20)
    print_for(fib((0,3)),20)

1, 1, 2, 3, 5, 8, 13, 21, 34, 55, 89, 144, 233, 377, 610, 987, 1597, 2584, 4181, 6765, ...

0, 3, 3, 6, 9, 15, 24, 39, 63, 102, 165, 267, 432, 699, 1131, 1830, 2961, 4791, 7752,

12543, ...

Logistic map

Difference equations, discrete-time analogues of differential equations, are a form of recurrence relation: the value of the state at the next time step is defined in terms of its value at previous time steps.

The logistic map is a famous difference equation, exhibiting a range of periodic and chaotic behaviours, depending on the value of its parameter +++r+++.

$$x_0 \in (0,1) ; x_{n+1} = r x_n(1-x_n)$$

    def logistic_map(r=4):
        x = 0.1
        while True:
            yield x
            x = r * x * (1-x)

    print_for(logistic_map())
    print_for(logistic_map(2))

0.1, 0.36000000000000004, 0.9216, 0.28901376000000006, 0.8219392261226498,

0.5854205387341974, 0.970813326249438, 0.11333924730376121, 0.4019738492975123,

0.9615634951138128, ...

0.1, 0.18000000000000002, 0.2952, 0.41611392, 0.4859262511644672, 0.49960385918742867,

0.49999968614491325, 0.49999999999980305, 0.5, 0.5, ...
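The dependence on +++r+++ can be probed numerically by discarding a long initial transient and looking at what is left. The following is only a sketch: the helper settled(), its skip and n parameters, and the extra x argument to logistic_map() are additions for illustration, not part of the original code. For +++r = 2+++ the values should sit at the fixed point +++1 - 1/r = 0.5+++, while for +++r = 3.2+++ they should alternate between two values.

```python
from itertools import islice

def logistic_map(r=4, x=0.1):
    # x is the starting value; the post fixes it at 0.1
    while True:
        yield x
        x = r * x * (1 - x)

def settled(r, skip=1000, n=4):
    """Return n values of the map after discarding the first skip values."""
    return list(islice(logistic_map(r), skip, skip + n))

print(settled(2))     # should sit at the fixed point
print(settled(3.2))   # should alternate between two values
```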

(The %matplotlib inline “magic” allows the plot to display in a Jupyter notebook.)

    %matplotlib inline
    import matplotlib.pyplot as py
    py.plot(list(islice(logistic_map(),200)))

    py.plot(list(islice(logistic_map(3.5),200)))

    from numpy import arange
    start = 2.8
    stop = 4
    step = (stop-start)*0.002
    skip = int(1/step) # no of initial vals to skip (converged to attractor)
    npts = 150 # no of values to plot per value of r
    for r in arange(start,stop,step):
        yl = logistic_map(r)
        list(islice(yl,skip)) # consume first few items
        py.scatter(list(islice(repeat(r,npts),npts)), list(islice(yl,npts)), marker='.', s=1)
    py.xlim(start,stop)
    py.ylim(0,1)

(I use s=1 to get a small point, rather than use a comma marker to get a pixel, because there is currently a bug in the use of pixels in scatter plots.)

Faster +++\pi+++

Many series for generating +++\pi+++ converge very slowly. One that converges extremely quickly is:

$$ \pi = \sum_{i=0}^\infty \frac{(i!)^2 \, 2^{i+1}}{(2i+1)!}$$

We could use generators for each component of the term to code this up as:

    import operator

    def pi_term():
        fact = accumulate(count(1), operator.mul)
        factsq = (i*i for i in fact)
        twoi1 = (2**(i+1) for i in count(1))
        def fact2i1():
            i,f = 3,6
            while True:
                yield f
                f = f * (i+1) * (i+2)
                i += 2
        yield 2 # the i=0 term (needed because of 0! = 1 issues)
        for i in map(lambda x,y,z: x*y/z, factsq, twoi1, fact2i1()):
            yield i

    print_for(accumulate(pi_term()),40)

2, 2.6666666666666665, 2.933333333333333, 3.0476190476190474, 3.098412698412698,

3.121500721500721, 3.132156732156732, 3.1371295371295367, 3.1394696806461506,

3.140578169680336, 3.141106021601377, 3.1413584725201353, 3.1414796489611394,

3.1415379931734746, 3.1415661593449467, 3.1415797881375944, 3.1415863960370602,

3.141589605588229, 3.1415911669915006, 3.1415919276751456, 3.1415922987403384,

3.141592479958223, 3.1415925685536337, 3.1415926119088344, 3.1415926331440347,

3.1415926435534467, 3.141592648659951, 3.14159265116678, 3.141592652398205,

3.1415926530034817, 3.1415926533011587, 3.141592653447635, 3.141592653519746,

3.1415926535552634, 3.141592653572765, 3.1415926535813923, 3.141592653585647,

3.141592653587746, 3.141592653588782, 3.141592653589293, ...

import timeit

%time [ i for i in islice(pi_term(),10000) if i < 0 ];

Wall time: 10.9 s

Each term can instead be computed from the previous one. Let

$$F_{k-1} = \frac{((k-1)!)^2 \, 2^{k}}{(2k-1)!}$$

Then

$$\begin{eqnarray} F_{k} &=& \frac{((k)!)^2 \, 2^{k+1}}{(2k+1)!} \\ &=& \frac{((k-1)! k)^2 \, 2 \times 2^{k}}{(2k-1)!(2k)(2k+1)} \\ &=& \frac{((k-1)!)^2 \, 2^{k} 2k^2}{(2k-1)! 2k(2k+1)} \\ &=& F_{k-1} \frac{k}{2k+1} \end{eqnarray}$$

So

$$F_0 = 2 ; F_{n} = F_{n-1} \frac{n}{2n+1}$$

    import math

    def pi_term_rec():
        pt = 2
        for n in count(1):
            yield pt
            pt = pt * n/(2*n+1)

    print_for(pi_term_rec(),30)
    print_for(accumulate(pi_term_rec()),30)
    print(math.pi)

2, 0.6666666666666666, 0.26666666666666666, 0.1142857142857143, 0.0507936507936508,

0.02308802308802309, 0.010656010656010658, 0.004972804972804974, 0.002340143516614105,

0.0011084890341856288, 0.0005278519210407756, 0.0002524509187586318,

0.00012117644100414327, 5.8344212335328244e-05, 2.816617147222743e-05,

1.3628792647851983e-05, 6.607899465625204e-06, 3.209551169017956e-06,

1.561403271414141e-06, 7.606836450479149e-07, 3.710651927063e-07,

1.8121788481005348e-07, 8.85954103515817e-08, 4.335520081034849e-08,

2.123520039690538e-08, 1.0409411959267343e-08, 5.1065039800179424e-09,

2.5068292265542627e-09, 1.2314248832196377e-09, 6.052766375147372e-10, ...

2, 2.6666666666666665, 2.933333333333333, 3.0476190476190474, 3.098412698412698,

3.121500721500721, 3.132156732156732, 3.1371295371295367, 3.1394696806461506,

3.140578169680336, 3.141106021601377, 3.1413584725201353, 3.1414796489611394,

3.1415379931734746, 3.1415661593449467, 3.1415797881375944, 3.1415863960370602,

3.141589605588229, 3.1415911669915006, 3.1415919276751456, 3.1415922987403384,

3.141592479958223, 3.1415925685536337, 3.1415926119088344, 3.1415926331440347,

3.1415926435534467, 3.141592648659951, 3.14159265116678, 3.141592652398205,

3.1415926530034817, ...

3.141592653589793

%time [ i for i in islice(pi_term_rec(),10000) if i < 0 ]

Wall time: 3.98 ms
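The recurrence derived above can also be checked exactly, without floating point rounding getting in the way. This is a sketch added for illustration, not part of the original notebook. It uses Python's Fraction type, and the names closed_form() and recurrence_terms() are made up here. It compares the first 20 terms produced by the recurrence with the factorial formula.

```python
from fractions import Fraction
from itertools import count, islice
from math import factorial

def closed_form(k):
    # k-th term of the series, computed directly from the factorial formula
    return Fraction(factorial(k) ** 2 * 2 ** (k + 1), factorial(2 * k + 1))

def recurrence_terms():
    # F_0 = 2 ; F_n = F_{n-1} * n / (2n + 1), in exact rational arithmetic
    term = Fraction(2)
    for n in count(1):
        yield term
        term = term * n / (2 * n + 1)

terms = list(islice(recurrence_terms(), 20))
print(all(t == closed_form(k) for k, t in enumerate(terms)))
```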

Sieving for primes

No discussion of generators would be complete without including a prime number generator. The standard algorithm is quite straightforward:

- Generate consecutive whole numbers, and test each for divisibility. If the current number isn’t divisible by anything, yield a new prime.

- Only test for divisibility by primes, and only up to the square root of the number being tested; this requires keeping a record of the primes found so far.

- Optimisation: treat 2 as a special case, and generate and test only the odd numbers.

    def primessqrt():
        primessofar = []
        yield 2
        for n in count(3,2): # check odd numbers only, starting with 3
            sqrtn = int(math.sqrt(n))
            testprimes = takewhile(lambda i: i<=sqrtn, primessofar)
            isprime = all(n % p for p in testprimes) # n % p == 0 if n is divisible
            if isprime: # if new prime, add to list and yield, else continue
                yield n
                primessofar.append(n)

    print_while(primessqrt(), 200)

2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31, 37, 41, 43, 47, 53, 59, 61, 67, 71, 73, 79, 83,

89, 97, 101, 103, 107, 109, 113, 127, 131, 137, 139, 149, 151, 157, 163, 167, 173, 179,

181, 191, 193, 197, 199, ...

An alternative is to use a sieve, held as a dictionary of markers:

- Generate consecutive whole numbers, and check whether each one’s dictionary entry has any markers.

- If there are no markers, yield a new prime +++p+++; start a new marker to strike out multiples of +++p+++ (start it at +++p^2+++, for the same reason the previous algorithm only needs to test values up to +++\sqrt n+++).

- If there are markers (such as for 15), the value isn’t prime; move each marker on to the next value it strikes out (so for 15, move the 3 marker on 6 places to strike out 21: each marker is moved on by twice its value, since we are optimising by not considering even values)

- Delete the current dictionary entry (so that the dictionary grows only as the number of primes found so far, rather than as the number of values checked, speeding up dictionary access).

    from collections import defaultdict

    def primesieve():
        yield 2
        sieve = defaultdict(set) # dict of n:{divisor} elems
        for n in count(3,2): # check odd numbers only
            if sieve[n] : # there are divisors, so not prime
                for d in sieve[n]: # move the sieve markers on
                    sieve[n+d].add(d)
            else: # set empty, so prime
                sieve[n*n].add(2*n)
                yield n
            # remove current dict item
            del sieve[n]

    print_for(primesieve(), 100)

2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31, 37, 41, 43, 47, 53, 59, 61, 67, 71, 73, 79, 83,

89, 97, 101, 103, 107, 109, 113, 127, 131, 137, 139, 149, 151, 157, 163, 167, 173, 179,

181, 191, 193, 197, 199, 211, 223, 227, 229, 233, 239, 241, 251, 257, 263, 269, 271, 277,

281, 283, 293, 307, 311, 313, 317, 331, 337, 347, 349, 353, 359, 367, 373, 379, 383, 389,

397, 401, 409, 419, 421, 431, 433, 439, 443, 449, 457, 461, 463, 467, 479, 487, 491, 499,

503, 509, 521, 523, 541, ...

%time [ i for i in islice(primessqrt(),100000) if i < 0 ]

%time [ i for i in islice(primesieve(),100000) if i < 0 ]

Wall time: 4.69 s

Wall time: 735 ms
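Since the sieve is the less obvious of the two algorithms, it is worth checking it against something independent. The sketch below is an addition, not part of the original notebook. It repeats primesieve() so that it runs on its own, and compares its first 200 values with a deliberately naive trial division test. The helper is_prime() is made up for this check.

```python
from collections import defaultdict
from itertools import count, islice

def primesieve():
    yield 2
    sieve = defaultdict(set)          # dict of n:{step} markers
    for n in count(3, 2):             # odd numbers only
        if sieve[n]:                  # markers present, so not prime
            for d in sieve[n]:        # move each marker on to its next multiple
                sieve[n + d].add(d)
        else:                         # no markers, so prime
            sieve[n * n].add(2 * n)
            yield n
        del sieve[n]                  # keep the dictionary small

def is_prime(n):
    # deliberately naive trial division, used only as an independent check
    return n > 1 and all(n % d for d in range(2, int(n ** 0.5) + 1))

first = list(islice(primesieve(), 200))
expected = list(islice((n for n in count(2) if is_prime(n)), 200))
print(first == expected)
```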

Saturday 25 November 2017

then four come along at once

Labels:

education,

research,

university

One of the tasks of an academic is examining research students. This involves reading a thesis, then discussing it with the student in a viva. In the UK the viva is held behind closed doors, and usually involves only two examiners, one internal (same university as the student) and one external. The scheme is different on the continent: the defence is public, following a lecture, and there may be multiple “opponents”, or even an entire panel, of examiners.

If every research student needs (a minimum of) two examiners, that implies for every student I supervise, I should examine at least two others (one as internal, one as external, to keep the numbers balanced). In fact, since many universities like to have “senior” external examiners, I should probably do a few more than that.

Each year, I usually take on one or two new students. So, each year, I should probably examine about three to five students. So far this year I have examined only two: both external continental defences, one in Trondheim in April, one in Lyon in July.

That implies I should have two or three more to do this year (if it is an average year). And sure enough, in November a pile of theses started raining down in a seemingly never-ending stream on my desk.

So the total number is about right, but all at once is a bit of a nightmare. Two internal students, two external students, all to be examined before the end of January. I know what I’m going to be reading over Christmas.


Thursday 23 November 2017

Fun with Python generator pipelines

When I was first looking at Python generators, I discovered David Beazley’s wonderful presentation on

Generator Tricks for Systems Programmers,

where he gives a lovely example of using generators in a Unix-pipeline fashion,

each generator piping its output into the next.


Before I start using this approach, I’m going to need some debugging help. I like to write-a-line-test-a-line, and I use print() statements a lot (I was weaned on Fortran IV). But printing a generator’s content consumes that content, messing up the following code (unless you comment out and rerun, which is just nasty).

We can use tee() to build a generator debugging printer. Tee the generator, print from the first element, and return the second (untouched) element (see my previous post on generators for the definition of print_for()):

    from itertools import *

    def print_debug(gen, n=10, sep=', ', end=", ...\n", enum=False):
        gen_tee = tee(gen)
        if enum: # add a count to the items
            print(*list(islice(enumerate(gen_tee[0],1),n)), sep=sep, end=end)
        else:
            print(*list(islice(gen_tee[0],n)), sep=sep, end=end)
        return gen_tee[1]

    counter = count(1)
    print_for(counter)
    print_for(counter)

    counter = count(1)
    counter = print_debug(counter)
    print_for(counter)

1, 2, 3, 4, 5, 6, 7, 8, 9, 10, ...

11, 12, 13, 14, 15, 16, 17, 18, 19, 20, ...

1, 2, 3, 4, 5, 6, 7, 8, 9, 10, ...

1, 2, 3, 4, 5, 6, 7, 8, 9, 10, ...
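The reason this works is that tee() hands back independent iterators over the same underlying stream. A minimal sketch of that behaviour on its own (an added illustration; the variable names are made up):

```python
from itertools import count, islice, tee

source = count(1)
peek, rest = tee(source)           # two independent views of one stream

peeked = list(islice(peek, 5))     # consuming one view...
untouched = list(islice(rest, 5))  # ...leaves the other where it was
print(peeked, untouched)
```

Two things are worth bearing in mind. Once a stream has been teed, the original iterator should not be used directly any more. Also, tee() has to buffer whatever one view has consumed and the other has not, so peeking at a handful of items is cheap but peeking at a large part of the stream is not.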

Bentley, J., Knuth, D. & McIlroy, D., 1986. Programming Pearls - A Literate Program. CACM, 29(6), pp.471–483. Available at: https://www.cs.princeton.edu/courses/archive/spring17/cos333/knuth-mcilroy.pdf.

In that paper, the following problem is posed:

Given a text file and an integer k, print the k most common words in the file (and the number of their occurrences) in decreasing frequency.

This is a deliberately vague problem specification from Bentley. Knuth tightens up the definition, and then gives a several-page (42 numbered paragraphs!) literate programming solution in Pascal, including a novel data structure, the trie. McIlroy then critiques Knuth’s solution, and suggests a six-stage Unix pipeline to do the same job:

    (1) tr -cs A-Za-z '
    ' |
    (2) tr A-Z a-z |
    (3) sort |
    (4) uniq -c |
    (5) sort -rn |
    (6) sed ${1}q

accompanied by the following helpful gloss for those unfamiliar with Unix shell arcana:

- Make one-word lines by transliterating the complement (-c) of the alphabet into newlines (note the quoted newline), and squeezing out (-s) multiple newlines.
- Transliterate upper case to lower case.
- Sort to bring identical words together.
- Replace each run of duplicate words with a single representative and include a count (-c).
- Sort in reverse (-r) numeric (-n) order.
- Pass through a stream editor; quit (q) after printing the number of lines designated by the script’s first parameter (${1}).

Using Beazley’s ideas, Python generators can give us a middle way between extreme Pascal verbosity and extreme Unix terseness, as follows.

Let’s use some online text files for our test input.

textlorum = "http://www.sample-videos.com/text/Sample-text-file-10kb.txt"

textgreatexpectations = "http://www.gutenberg.org/files/1400/1400-0.txt"

Let’s open the file, and print out the first few lines, to see what we have. (The raw input is binary, so we used decode() to convert to strings; again, to do all this properly is a bit more work, but not the point of this discussion.)

    import urllib.request

    infile = urllib.request.urlopen(textlorum)
    lines = (line.decode() for line in infile)

    # have a peek at the first 3 lines, numbered
    lines = print_debug(lines,n=3, sep='\n', end='\n', enum=True)

(1, 'Lorem ipsum dolor sit amet, consectetur adipiscing elit. Vivamus condimentum sagittis

lacus, laoreet luctus ligula laoreet ut. Vestibulum ullamcorper accumsan velit vel

vehicula. Proin tempor lacus arcu. Nunc at elit condimentum, semper nisi et, condimentum

mi. In venenatis blandit nibh at sollicitudin. Vestibulum dapibus mauris at orci maximus

pellentesque. Nullam id elementum ipsum. Suspendisse cursus lobortis viverra. Proin et

erat at mauris tincidunt porttitor vitae ac dui.\r\n')

(2, '\r\n')

(3, 'Donec vulputate lorem tortor, nec fermentum nibh bibendum vel. Lorem ipsum dolor sit

amet, consectetur adipiscing elit. Praesent dictum luctus massa, non euismod lacus.

Pellentesque condimentum dolor est, ut dapibus lectus luctus ac. Ut sagittis commodo arcu.

Integer nisi nulla, facilisis sit amet nulla quis, eleifend suscipit purus. Class aptent

taciti sociosqu ad litora torquent per conubia nostra, per inceptos himenaeos.

Aliquam euismod ultrices lorem, sit amet imperdiet est tincidunt vel. Phasellus dictum

justo sit amet ligula varius aliquet auctor et metus. Fusce vitae tortor et nisi pulvinar

vestibulum eget in risus. Donec ante ex, placerat a lorem eget, ultricies bibendum purus.

Nam sit amet neque non ante laoreet rutrum. Nullam aliquet commodo urna, sed ullamcorper

odio feugiat id. Mauris nisi sapien, porttitor in condimentum nec, venenatis eu urna.

Pellentesque feugiat diam est, at rhoncus orci porttitor non.\r\n')

Each paragraph is a single line ending in '\r\n', and paragraphs are separated by blank lines. What the first stage of McIlroy’s Unix pipeline does is output single word lines, by turning everything that is not a letter (here, only '.,;' and newlines) into newlines, and squashing multiple newlines into single ones.

We can do the same by making use of the Python re (regular expression) module. We build a generator that takes in a line of data, and yields the words (defined using a regular expression as any sequence of upper and lower case letters) one at a time, translated to lower case (using lower()). We then embed that in a second generator, to iterate over all the lines of data.

    import re

    words = (w.group(0).lower() for line in lines for w in re.finditer(r"[a-zA-Z]+", line) )

    # have a peek at the first 100 words
    words = print_debug(words,100)

lorem, ipsum, dolor, sit, amet, consectetur, adipiscing, elit, vivamus, condimentum,

sagittis, lacus, laoreet, luctus, ligula, laoreet, ut, vestibulum, ullamcorper, accumsan,

velit, vel, vehicula, proin, tempor, lacus, arcu, nunc, at, elit, condimentum, semper,

nisi, et, condimentum, mi, in, venenatis, blandit, nibh, at, sollicitudin, vestibulum,

dapibus, mauris, at, orci, maximus, pellentesque, nullam, id, elementum, ipsum,

suspendisse, cursus, lobortis, viverra, proin, et, erat, at, mauris, tincidunt,

porttitor, vitae, ac, dui, donec, vulputate, lorem, tortor, nec, fermentum, nibh,

bibendum, vel, lorem, ipsum, dolor, sit, amet, consectetur, adipiscing, elit, praesent,

dictum, luctus, massa, non, euismod, lacus, pellentesque, condimentum, dolor, est, ut,

dapibus, lectus, luctus, ac, ...

Next in McIlroy’s pipeline is the sort. This is not a generator friendly operation, as it needs the entire file of words in memory to be sorted. But we can do the job of the following line, uniq -c, without having to sort first, by building a dictionary of the occurrences. We still need to read the entire file to do this, but because we iterate over the word generator, we can do this incrementally. So we don’t need to store the entire file in memory, which becomes important if the file is large.

We can use a default dictionary to save us needing special code when a new word is discovered.

    from collections import defaultdict

    def build_word_dict(word_gen):
        # construct and return a dictionary of (word,count) items
        wdict = defaultdict(int)
        for word in word_gen:
            wdict[word] += 1
        return wdict

    word_dict = build_word_dict(words)

    # print words with more than 15 occurrences
    print({k:v for k,v in word_dict.items() if v>15})
    print('total number of words =', sum([v for k,v in word_dict.items()]))
    print('number of unique words =', len(word_dict))

{'sit': 19, 'sed': 25, 'ut': 18, 'tincidunt': 19, 'metus': 16, 'nulla': 21, 'et': 30,

'amet': 19, 'at': 19, 'ipsum': 21, 'non': 21, 'lorem': 21, 'in': 25, 'pellentesque': 17}

total number of words = 1368

number of unique words = 175

The dictionary (175 items) is much smaller than the entire file (1368 words), drastically saving on memory. We can now sort on the dictionary values to get a list of words in frequency order, then slice off the top k of them.

    k = 10 # the "integer k" in the spec; k most common words
    top_word_keys = sorted(word_dict, key=word_dict.get, reverse=True)
    for key in islice(top_word_keys,k):
        print(word_dict[key], key)

30 et

25 in

25 sed

21 non

21 lorem

21 nulla

21 ipsum

19 sit

19 tincidunt

19 at
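For comparison, the standard library’s collections.Counter can do the counting and the top-k selection in one go. This is an alternative sketch rather than the approach used in this post. The function top_words() and the two sample lines are made up for illustration, and stand in for the lines generator over the downloaded file.

```python
from collections import Counter
import re

def top_words(lines, k):
    # Counter consumes the word generator incrementally, like build_word_dict
    words = (m.group(0).lower() for line in lines
             for m in re.finditer(r"[a-zA-Z]+", line))
    return Counter(words).most_common(k)

sample = ["The cat and the dog.", "The dog barked; the cat ran."]
for word, n in top_words(sample, 3):
    print(n, word)
```

As with the dictionary version, only the distinct words and their counts are held in memory, not the whole file.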

    from itertools import *
    import urllib.request
    import re
    from collections import defaultdict

    textsource = "http://www.sample-videos.com/text/Sample-text-file-10kb.txt"
    textgreatexpectations = "http://www.gutenberg.org/files/1400/1400-0.txt"
    k = 20 # the "integer k" in the spec; k most common words

    def build_word_dict(word_gen):
        # construct and return a dictionary of (word,count) items
        wdict = defaultdict(int)
        for word in word_gen:
            wdict[word] += 1
        return wdict

    infile = urllib.request.urlopen(textgreatexpectations)
    lines = (line.decode() for line in infile)
    words = (w.group(0).lower() for line in lines for w in re.finditer(r"[a-zA-Z]+", line) )

    word_dict = build_word_dict(words)
    top_word_keys = sorted(word_dict, key=word_dict.get, reverse=True)
    for key in islice(top_word_keys,k):
        print(word_dict[key], key)

8321 the

7166 and

6666 i

5235 to

4557 of

4112 a

3090 that

3083 in

2837 was

2811 it

2382 you

2263 he

2093 had

2070 my

1998 me

1860 his

1807 with

1786 as

1654 at

1432 on

print('total number of words =', sum([v for k,v in word_dict.items()]))

print('number of unique words =', len(word_dict))

total number of words = 192016

number of unique words = 10989

# get full stop word list from https://tinyurl.com/yce6ttyc

stop_words = [ "a", "about", "above", "after", "again", "against", "all", "am", ... ];

word_dict_stopped = { k:v for k,v in word_dict.items() if k not in stop_words }

print('number of stop words =', len(stop_words))

print({k:v for k,v in word_dict_stopped.items() if v>600})

print('total number of words after stop =', sum([v for k,v in word_dict_stopped.items()]))

print('number of unique words after stop =', len(word_dict_stopped))

number of stop words = 153

{'said': 1349, 't': 682, 'no': 655, 'joe': 747, 'not': 1088, 's': 1194, 'mr': 711}

total number of words after stop = 89529

number of unique words after stop = 10870

top_word_keys = sorted(word_dict_stopped, key=word_dict.get, reverse=True)

for key in islice(top_word_keys,k):
    print(word_dict[key], key)

1349 said

1194 s

1088 not

747 joe

711 mr

682 t

655 no

514 one

453 now

392 know

383 miss

375 come

374 time

371 little

368 upon

341 pip

327 like

325 looked

321 man

318 havisham

We also see a couple of rather strange ‘words’: s and t. This is because the original assumptions of no apostrophes (and also of no hyphens) don’t actually hold for the Great Expectations file, so a more sophisticated definition of a ‘word’ is needed. But that’s another story for another time.
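As a hint of what such a definition might look like, here is a rough sketch that lets a word contain single internal apostrophes or hyphens. The pattern WORD_RE and the helper words_in() are my own assumptions for illustration, not a definitive answer: what should count as a word depends on the text.

```python
import re

# a 'word' is a run of letters, optionally joined by single internal
# apostrophes (straight or curly) or hyphens; this pattern is an assumption
WORD_RE = re.compile(r"[a-zA-Z]+(?:['’-][a-zA-Z]+)*")

def words_in(line):
    # return the lower-cased words found in one line of text
    return [m.group(0).lower() for m in WORD_RE.finditer(line)]

print(words_in("Joe's pie-crust wasn't Pip's."))
```

With this pattern a doubled hyphen used as a dash still splits the words on either side of it, since only a single joining character is allowed between runs of letters.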

Wednesday 22 November 2017

view from a hotel window

Labels:

Bristol,

complexity

I’m in Bristol, attending a “community consultation workshop” on the Review of Complexity Science as an EPSRC Research Area. I travelled down by train last night, and walked to the hotel. Google maps wanted me to cut across some weird footbridge over a river, and wander through back streets. Since it was 10 pm, pitch dark, and very windy, I thanked it kindly, and walked straight up the well-lit main road.

This is the lovely autumnal view that greeted me this morning:

Now off to talk about complexity science, complex systems, systems thinking, and cross-disciplinarity.


Tuesday 21 November 2017

Fun with Python generator expressions

Labels:

mathematics,

python

In the past, I have rhapsodised over Python’s list comprehensions. I had also heard of its generators, but never looked at them seriously. Recently I have been thinking about a problem in stream programming, where I will need the generators’ lazy evaluation. So I have been looking at them in more detail. And discovered that (in Python 3 at least) the list comprehension is just syntactic sugar for a generator expression output turned into a list. That is:

[ <expr> for i in <iter> if <cond> ] is just syntactic sugar for list( <expr> for i in <iter> if <cond> ). Let’s look at this in more detail.

With a list comprehension, I can construct, say, a list of the first 10 square numbers.

squares = [n*n for n in range(1,11)]

print(squares)

[1, 4, 9, 16, 25, 36, 49, 64, 81, 100]

squares = list(n*n for n in range(1,11))

print(squares)

[1, 4, 9, 16, 25, 36, 49, 64, 81, 100]
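The two forms give the same list, but the generator expression on its own does no work until something asks it for a value. A small sketch to make that visible; the square() helper and the calls list are hypothetical names, added only to record when each value is actually computed.

```python
calls = []

def square(n):
    # record each evaluation so we can see when it happens
    calls.append(n)
    return n*n

lazy = (square(n) for n in range(1, 11))
print(calls)                    # nothing has been evaluated yet
print(next(lazy), calls)        # asking for one value evaluates one item

eager = [square(n) for n in range(1, 11)]
print(len(calls))               # the list comprehension evaluated all ten at once
```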

The examples above are bounded by range(). A generator can be unbounded.

from itertools import *

squares = (n*n for n in count(1))

for s in squares:
    print(s, end=", ")
    if s >= 100:
        print('...')
        break

1, 4, 9, 16, 25, 36, 49, 64, 81, 100, ...

count() imported from itertools is an iterable like range() except that it does not have a stop value. It is an iterator that just keeps incrementing until you stop asking it for more values.

The module itertools has many such useful functions you can use with potentially infinite generators. For example, we can islice() off the first few items of a potentially unbounded generator, to give a bounded generator that returns only those items. Here we slice off the first 10 values:

squares = (n*n for n in count(1))

firstfew = islice(squares,10)

print(list(firstfew))

[1, 4, 9, 16, 25, 36, 49, 64, 81, 100]

To stop at a value rather than after a fixed number of items, we can use takewhile(), here to get the squares up to 150.

squares = (n*n for n in count(1))

firstfew = takewhile(lambda n: n<=150, squares)

print(list(firstfew))

[1, 4, 9, 16, 25, 36, 49, 64, 81, 100, 121, 144]
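One thing to watch with takewhile(): to discover that it should stop, it has to pull the first failing item from the underlying generator, and that item is then lost. A minimal sketch of the effect, using the same squares generator (the names small and following are just for illustration):

```python
from itertools import count, takewhile

squares = (n*n for n in count(1))

# take the squares up to 150; the test on 169 fails and ends the takewhile
small = list(takewhile(lambda s: s <= 150, squares))

# 169 has already been consumed by that failing test, so it is skipped
following = next(squares)

print(small[-1], following)
```

So if the rest of the generator is needed afterwards, takewhile() is not a clean way to split it in two.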

We can use islice() and takewhile() to define a couple of "pretty print" functions:

# print the first n items of the generator, comma separated
def print_for(gen,n=10):
    print(*list(islice(gen,n)), sep=', ', end=", ...\n")

# print the generator up to value nmax, comma separated
def print_while(gen,nmax=250):
    print(*list(takewhile(lambda n: n<=nmax, gen)), sep=', ', end=", ...\n")

print_for(n*n for n in count(1))

print_while(n*n for n in count(1))

1, 4, 9, 16, 25, 36, 49, 64, 81, 100, ...

1, 4, 9, 16, 25, 36, 49, 64, 81, 100, 121, 144, 169, 196, 225, ...

squares = (n*n for n in count(1))

print_for(squares)

squares = (n*n for n in count(1))

print_while(squares)

1, 4, 9, 16, 25, 36, 49, 64, 81, 100, ...

1, 4, 9, 16, 25, 36, 49, 64, 81, 100, 121, 144, 169, 196, 225, ...

There are also dropwhile(), which drops items until its predicate is false, and filterfalse(), which drops all items for which its predicate is true.

print_for(dropwhile(lambda n: n<=5, count(1))) # drop items while they are <= 5

print_for(filterfalse(lambda n: n%3, count(1))) # filter out items not divisible by 3

6, 7, 8, 9, 10, 11, 12, 13, 14, 15, ...

3, 6, 9, 12, 15, 18, 21, 24, 27, 30, ...
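With count(1) the two can look rather similar, because the values only ever increase. The difference shows up on data that goes up and down: dropwhile() only discards the leading run, whereas filterfalse() tests every item. A small sketch, with a made-up list called data:

```python
from itertools import dropwhile, filterfalse

data = [1, 2, 6, 3, 7, 1]   # hypothetical values, not monotonic

# drops the leading 1, 2; once 6 fails the test, everything after is kept
after_drop = list(dropwhile(lambda n: n < 5, data))

# drops every item for which the test is true, wherever it occurs
after_filter = list(filterfalse(lambda n: n < 5, data))

print(after_drop)
print(after_filter)
```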

Restarting generators

I have been quite careful above to keep redefining squares. This is because once an item is consumed, it is gone. If I take a generator instance, like squares, and slice off the first ten items, then slice again, I get the next ten items. For example:

squares = (n*n for n in count(1))

print_for(squares)

print_for(squares) # continues from next value of same generator

print_for(n*n for n in count(1))

print_for(n*n for n in count(1)) # restarts, with new generator

1, 4, 9, 16, 25, 36, 49, 64, 81, 100, ...

121, 144, 169, 196, 225, 256, 289, 324, 361, 400, ...

1, 4, 9, 16, 25, 36, 49, 64, 81, 100, ...

1, 4, 9, 16, 25, 36, 49, 64, 81, 100, ...

squares = (n*n for n in count(1))

# slice off the first 10?

islice(squares, 10)

print_for(squares) # maybe not what is expected

1, 4, 9, 16, 25, 36, 49, 64, 81, 100, ...

islice() produced a generator. But since nothing accessed that generator, nothing actually got sliced off squares. Compare:

squares = (n*n for n in count(1))

# slice off and consume the first 10

list(islice(squares, 10))

print_for(squares)

121, 144, 169, 196, 225, 256, 289, 324, 361, 400, ...
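One way to avoid retyping the generator expression each time is to wrap it in a function that hands back a fresh generator on every call. This is only a sketch of the idea; the function name squares() is my choice here, and it deliberately shadows the variable used above.

```python
from itertools import count, islice

def squares():
    # each call returns a new generator, starting again from 1
    return (n*n for n in count(1))

first = list(islice(squares(), 5))
again = list(islice(squares(), 5))   # restarts, because it is a new generator

print(first)
print(again)
```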

tee() for two

Once it’s gone, it’s gone. But what if you want it back? You could always iterate again. But what if the items are expensive to compute? For example, you may be reading a large file, and don’t want to read it all again. Here tee() is useful for "remembering" earlier items. (Note: this doesn’t make $n$ copies of an iterator, rather it gives $n$ pointers into a single iterator.)

sq_ptr = tee((n*n for n in count(1)))

print_for(sq_ptr[0])

print_for(sq_ptr[0],5)

print_for(sq_ptr[1])

print_for(sq_ptr[1])

print_for(sq_ptr[1],5)

print_for(sq_ptr[0])

1, 4, 9, 16, 25, 36, 49, 64, 81, 100, ...

121, 144, 169, 196, 225, ...

1, 4, 9, 16, 25, 36, 49, 64, 81, 100, ...

121, 144, 169, 196, 225, 256, 289, 324, 361, 400, ...

441, 484, 529, 576, 625, ...

256, 289, 324, 361, 400, 441, 484, 529, 576, 625, ...
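Because the pointers share a single underlying iterator, that iterator should be left alone once it has been handed to tee(). A small sketch of what goes wrong otherwise; the names source, a, b and skipped are just for illustration.

```python
from itertools import count, islice, tee

source = (n*n for n in count(1))
a, b = tee(source)

# advancing the original behind tee's back: neither pointer ever sees this item
skipped = next(source)

from_a = list(islice(a, 3))
from_b = list(islice(b, 3))

print(skipped, from_a, from_b)
```

Both pointers start at 4, not 1. Note also that tee() has to hold on to every item that one pointer has passed but another has not yet reached, so if one pointer runs a long way ahead, the memory saving is lost.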

def triples(iterable):
    a, b, c = tee(iterable,3)
    next(b)
    list(islice(c,2))
    return zip(a, b, c)

print_for(triples(count(1)))

print_for(triples('abcdefghijklmnopqrstuvwxyz'))

(1, 2, 3), (2, 3, 4), (3, 4, 5), (4, 5, 6), (5, 6, 7), (6, 7, 8), (7, 8, 9), (8, 9, 10),

(9, 10, 11), (10, 11, 12), ...

('a', 'b', 'c'), ('b', 'c', 'd'), ('c', 'd', 'e'), ('d', 'e', 'f'), ('e', 'f', 'g'),

('f', 'g', 'h'), ('g', 'h', 'i'), ('h', 'i', 'j'), ('i', 'j', 'k'), ('j', 'k', 'l'), ...

def sliding_window(iterable,n=2):
    windows = tee(iterable,n)
    for i in range(n):
        list(islice(windows[i],i))
    return zip(*windows)

print_for(sliding_window(count(1),4))

print_for(sliding_window('abcdefghijklmnopqrstuvwxyz',3))

(1, 2, 3, 4), (2, 3, 4, 5), (3, 4, 5, 6), (4, 5, 6, 7), (5, 6, 7, 8), (6, 7, 8, 9),

(7, 8, 9, 10), (8, 9, 10, 11), (9, 10, 11, 12), (10, 11, 12, 13), ...

('a', 'b', 'c'), ('b', 'c', 'd'), ('c', 'd', 'e'), ('d', 'e', 'f'), ('e', 'f', 'g'),

('f', 'g', 'h'), ('g', 'h', 'i'), ('h', 'i', 'j'), ('i', 'j', 'k'), ('j', 'k', 'l'), ...
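For comparison, the same sliding window can be built without tee(), by keeping the current window in a fixed-length collections.deque. This is a sketch of an alternative, not a replacement for the version above; the name sliding_window_deque is hypothetical.

```python
from collections import deque
from itertools import count, islice

def sliding_window_deque(iterable, n=2):
    # keep the last n items in a deque; appending drops the oldest one
    it = iter(iterable)
    window = deque(islice(it, n), maxlen=n)
    if len(window) == n:
        yield tuple(window)
    for item in it:
        window.append(item)
        yield tuple(window)

print(list(islice(sliding_window_deque(count(1), 4), 3)))
print(list(sliding_window_deque('abcd', 3)))
```

If the input has fewer than n items, this version yields nothing, which matches what zip() over the tee'd pointers does.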

Sums and products

The itertools module has a function accumulate() that takes an iterator, and returns a generator that comprises the sum of the values up to that point. We could use this to implement a simple version of count() "the hard way", by using repeat(), which simply repeats its argument endlessly:

print_for(repeat(3))

print_for(accumulate(repeat(1)))

3, 3, 3, 3, 3, 3, 3, 3, 3, 3, ...

1, 2, 3, 4, 5, 6, 7, 8, 9, 10, ...

The triangular numbers are 1, 1+2, 1+2+3, ... We can use count() and accumulate() to generate these:

print_for(accumulate(count(1)))

1, 3, 6, 10, 15, 21, 28, 36, 45, 55, ...

accumulate() defaults to summing the items, but other functions can be used.

import operator

factorial = accumulate(count(1), operator.mul)

print_for(factorial)

1, 2, 6, 24, 120, 720, 5040, 40320, 362880, 3628800, ...
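The function passed to accumulate() need not come from operator: any function of two arguments will do. As a small sketch, passing the built-in max gives a running maximum. The readings list is made up for illustration.

```python
from itertools import accumulate

readings = [3, 1, 4, 1, 5, 9, 2, 6]   # hypothetical data

# each output item is the largest value seen so far
running_max = list(accumulate(readings, max))

print(running_max)
```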

Arithmetic series and products

We can use accumulate() to calculate infinite sums and products (or at least, give us the first however many terms).

sum of reciprocal squares

$$ \sum_{n=1}^\infty \frac{1}{n^2} = \frac{\pi^2}{6} $$

import math

recip_squares = (1/(n*n) for n in count(1))

print_for(accumulate(recip_squares))

# or all in one go, now with 30 terms:

print_for(accumulate(1/(n*n) for n in count(1)),30)

# it converges rather slowly

print('limit =', math.pi*math.pi/6)

1.0, 1.25, 1.3611111111111112, 1.4236111111111112, 1.4636111111111112, 1.4913888888888889,

1.511797052154195, 1.527422052154195, 1.5397677311665408, 1.5497677311665408, ...

1.0, 1.25, 1.3611111111111112, 1.4236111111111112, 1.4636111111111112, 1.4913888888888889,

1.511797052154195, 1.527422052154195, 1.5397677311665408, 1.5497677311665408,

1.558032193976458, 1.5649766384209025, 1.5708937981842162, 1.5759958390005426,

1.580440283444987, 1.584346533444987, 1.587806741057444, 1.5908931608105303,

1.5936632439130234, 1.5961632439130233, 1.5984308176091684, 1.6004969333116477,

1.6023872924798896, 1.6041234035910008, 1.6057234035910009, 1.6072026935318293,

1.6085744356443121, 1.6098499458483937, 1.6110390064904865, 1.6121501176015975, ...

limit = 1.6449340668482264
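To put a number on "rather slowly": the tail of this series after N terms is roughly 1/N, so each extra decimal digit costs about ten times as many terms. A quick sketch to check that numerically; the helper partial_sum() is a hypothetical name, and it uses islice() to jump straight to the N-th partial sum.

```python
import math
from itertools import accumulate, count, islice

def partial_sum(n_terms):
    # the n_terms-th partial sum of the reciprocal squares
    sums = accumulate(1/(n*n) for n in count(1))
    return next(islice(sums, n_terms - 1, None))

limit = math.pi**2 / 6

for n_terms in (10, 100, 1000):
    error = limit - partial_sum(n_terms)
    # error * n_terms should be close to 1 if the error behaves like 1/N
    print(n_terms, error, error * n_terms)
```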

sum of reciprocal powers of 2

$$ \sum_{n=1}^\infty \frac{1}{2^n} = 1 $$

print_for(accumulate(1/(2**n) for n in count(1)),20)

0.5, 0.75, 0.875, 0.9375, 0.96875, 0.984375, 0.9921875, 0.99609375, 0.998046875,

0.9990234375, 0.99951171875, 0.999755859375, 0.9998779296875, 0.99993896484375,

0.999969482421875, 0.9999847412109375, 0.9999923706054688, 0.9999961853027344,

0.9999980926513672, 0.9999990463256836, ...

factorial

$$ \prod_{i=1}^n i = n! $$

print_for(accumulate((n for n in count(1)), operator.mul))

1, 2, 6, 24, 120, 720, 5040, 40320, 362880, 3628800, ...

a product for $\pi$

$$ \prod_{n=1}^\infty \left( \frac{4n^2}{4n^2-1} \right) = \frac{\pi}{2} $$

print_for(accumulate((1/(1-1/(4*n**2)) for n in count(1)), operator.mul),20)

# it also converges rather slowly

print('limit =', math.pi/2)

1.3333333333333333, 1.422222222222222, 1.4628571428571429, 1.4860770975056687,

1.5010879772784533, 1.51158509600068, 1.5193368144417092, 1.525294998027755,

1.5300172735634447, 1.533851903321749, 1.5370275801402207, 1.539700671583943,

1.5419817096159192, 1.5439510349155563, 1.5456684442981097, 1.5471793616434646,

1.5485189108743247, 1.549714678373069, 1.5507886317191353, 1.5517584807696163, ...

limit = 1.5707963267948966
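The floating point values hide the structure of the partial products. As a sketch, the same accumulate() call works unchanged on exact rationals from the standard fractions module, using the term in its $4n^2/(4n^2-1)$ form. The generator function wallis_terms() is a name of my own choosing.

```python
from fractions import Fraction
from itertools import accumulate, count, islice
import operator

def wallis_terms():
    # the terms 4n^2 / (4n^2 - 1) as exact fractions
    return (Fraction(4*n*n, 4*n*n - 1) for n in count(1))

partials = list(islice(accumulate(wallis_terms(), operator.mul), 3))

print(*partials, sep=', ')
print(float(partials[-1]))   # agrees with the third floating point value above
```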
